Transition From Pandas to Spark: Koalas to the Rescue

Problem Statement at Hand

- As data size grows from MB → GB → TB, processing time goes from seconds → minutes → hours, and, damn, our working Python scripts start crashing with pandas out-of-memory exceptions.

- The team started looking for a more enterprise-grade, scalable approach to handle the big-data surge, and Spark and its ecosystem became the de facto standard solution for such problems.

- But wait: Databricks works with either PySpark or Scala.

- So do we need to rewrite all our pandas code in PySpark, and keep doing so for every new data science project as well? Well, the answer is no.

- In May 2019, the team behind Spark introduced Koalas (not the cute, lazy little animals) to the open-source community.

Let me describe a typical data scientist's journey:

- As students at university or on MOOCs, they rely on pandas.

- As interns or freshers analysing small datasets, they rely on pandas.

- After some years in industry, as seniors, they start analysing big datasets with Spark DataFrames.

- But PySpark has a very different set of APIs compared with single-node Python packages such as pandas, so there is a steep learning curve, and that while deliverables are due.

- These problems led to the development of Koalas.

- By combining the two, Koalas benefits both pandas and Spark users and delivers greater value to organisations much faster.

- Koalas is a pure open-source Python library that provides the pandas API on top of Apache Spark.

- Koalas unifies the two ecosystems behind a familiar API.

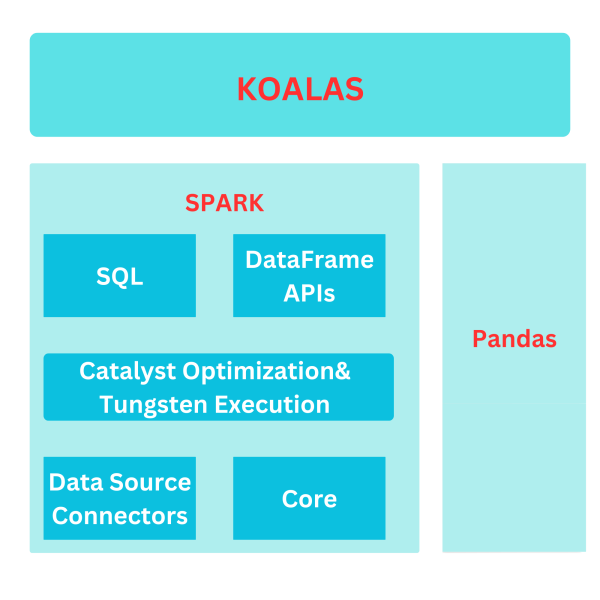

Koalas Architecture

Let's see it in action with an example:

Pandas Code

`pandas_df.groupby("Destination").sum().nlargest(10, columns="TCount")`
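To make the pandas one-liner concrete, the sketch below runs it on a tiny, made-up table. The values are illustrative only, and `TCount` is assumed to be the intended column name.

```python
import pandas as pd

# Made-up sample data: one row per record, with a trip count per destination.
pandas_df = pd.DataFrame(
    {
        "Destination": ["LHR", "JFK", "LHR", "CDG", "JFK", "LHR"],
        "TCount": [5, 3, 2, 6, 4, 1],
    }
)

# Total TCount per destination, then keep the 10 largest totals
# (there are only 3 destinations here, so all of them come back, largest first).
top = pandas_df.groupby("Destination").sum().nlargest(10, columns="TCount")
print(top)
```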

PySpark Code

`spark_df.groupBy("Destination").sum().orderBy("sum(TCount)", ascending=False).limit(10)`

Koalas Code

`koalas_df.groupby("Destination").sum().nlargest(10, columns="TCount")`

- A word of caution: always make sure the data is explicitly ordered, for example with `sort_index()`, because in a distributed computing environment we cannot know in which order the data arrives.
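The ordering caution can be illustrated without a cluster. In the sketch below, plain pandas stands in for Koalas (the two share this API), and `groupby(..., sort=False)`, which returns groups in order of first appearance, is only a stand-in for the arbitrary order a distributed groupby may produce. The data is made up.

```python
import pandas as pd

df = pd.DataFrame(
    {
        "Destination": ["LHR", "JFK", "CDG", "JFK", "LHR"],
        "TCount": [5, 3, 6, 4, 3],
    }
)

# Stand-in for distributed output: group order is not guaranteed;
# here it simply follows the order in which the keys first appear.
unordered = df.groupby("Destination", sort=False)["TCount"].sum()

# Sorting by the index (the group key) makes the result deterministic,
# regardless of the order in which the groups came back.
ordered = unordered.sort_index()
print(ordered)
```

On a real Koalas DataFrame, the explicit `sort_index()` step is the part that carries over.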

Pandas to Koalas: Some Useful Tips

- Conversions between the two types of DataFrame are seamless.

- `koalas_df.to_spark()` converts a Koalas DataFrame into a Spark DataFrame, which we can hand over to the PySpark experts, perhaps the deployment team; `pyspark_df.to_koalas()` converts it back.

- Almost all popular pandas operations (more than 70% of the pandas API) are available in Koalas, and visualization is supported via matplotlib. The community accepts requests for missing operations through the Koalas GitHub page.

- Some functionality is deliberately not implemented, such as `DataFrame.values`, because it would load all the data into the driver's memory and can cause out-of-memory exceptions. The easiest workaround is to call `koalas_df.to_pandas()`, do the manipulation in pandas, and convert back with `ks.from_pandas(pandas_df)` (where `ks` is `databricks.koalas`).

- We need to be aware of different execution principles, such as ordering, lazy evaluation, the underlying Spark DataFrame, sorting after `groupby`, the different structure of `groupby().apply()`, and different NaN treatment.

- For distributed row-based jobs, use `koalas_df.apply(func, axis=1)`; this distributes the function calls over all rows among the Spark workers.

- `koalas_df.cache()` keeps the DataFrame cached so it is not recomputed from the beginning every time, which can be leveraged for exploratory data analysis.
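Two of the tips above, the row-wise `apply(..., axis=1)` and the `to_pandas()` round trip, can be sketched as follows. The helper names (`route_label`, `label_routes`, `roundtrip_through_pandas`) and the `Origin` column are hypothetical. The row-wise part is written and checked against plain pandas, on the assumption that the same `apply` call works unchanged on a Koalas DataFrame. The round-trip helper assumes the Koalas package (`databricks.koalas`) and a running Spark session, and is only appropriate for data small enough to fit in driver memory.

```python
import pandas as pd


def route_label(row):
    # Row-wise function: receives one row as a pandas Series.
    # "Origin" is a hypothetical column used for illustration.
    return f"{row['Origin']}->{row['Destination']}"


def label_routes(df):
    # On pandas this runs locally; per the tip above, the same call on a
    # Koalas DataFrame distributes the per-row calls across Spark workers.
    return df.apply(route_label, axis=1)


def roundtrip_through_pandas(koalas_df, pandas_fn):
    # Hypothetical helper for operations Koalas deliberately leaves out:
    # collect to the driver, run pandas code, then convert back to Koalas.
    # Assumes Koalas is installed and a Spark session is available.
    import databricks.koalas as ks

    pandas_df = koalas_df.to_pandas()
    return ks.from_pandas(pandas_fn(pandas_df))


# Usage on a small, made-up pandas DataFrame.
sample = pd.DataFrame({"Origin": ["BLR", "DEL"], "Destination": ["LHR", "JFK"]})
print(label_routes(sample).tolist())
```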