Want to Bring Reliability & Performance to Your Cloud Data Lakes? Why Not Try Databricks Delta

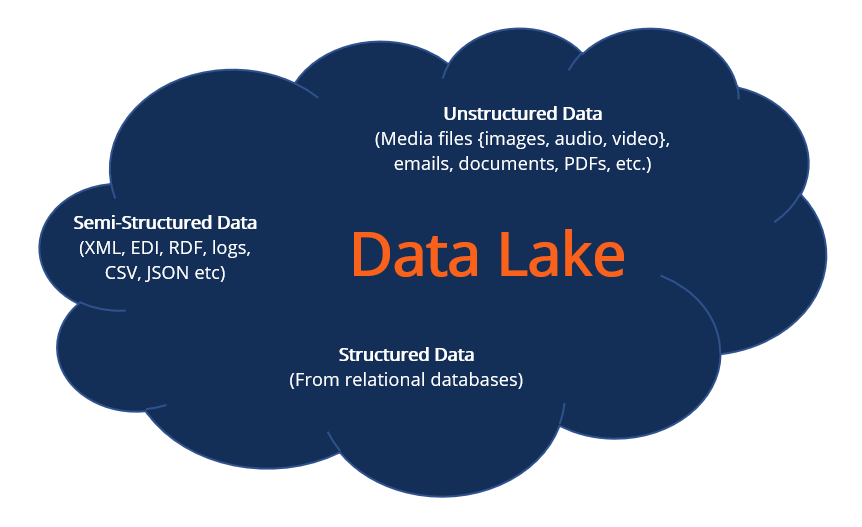

Organizations have taken on ambitious projects to cope with their ever-growing data volumes, adopting cloud data lakes as the go-to place to collect data before making it available for analysis.

Cloud data lakes were supposed to be cheap and scalable, but they come with their own set of challenges:

- No atomicity: failed production jobs leave data in a corrupt state, requiring tedious recovery without any help from the system.

- No schema enforcement: the data written can be inconsistent and unusable.

- No consistency/isolation: it is almost impossible to mix appends and reads, or batch and streaming.

- No native support for updates to the data lake.
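To make the schema enforcement point concrete, here is a minimal sketch in plain Python of what a write path with validation might look like. The column names, the `EXPECTED_SCHEMA` dictionary, and the `validate` and `write_batch` helpers are all hypothetical and only illustrate the idea; this is not how any particular data lake or Delta implements it.

```python
# Toy schema check. The schema and helper names are illustrative assumptions.
EXPECTED_SCHEMA = {"event_id": int, "user": str, "amount": float}


def validate(record, schema=EXPECTED_SCHEMA):
    """Return a list of problems; an empty list means the record conforms."""
    problems = []
    for column, expected_type in schema.items():
        if column not in record:
            problems.append(f"missing column: {column}")
        elif not isinstance(record[column], expected_type):
            actual = type(record[column]).__name__
            problems.append(
                f"{column}: expected {expected_type.__name__}, got {actual}"
            )
    for column in record:
        if column not in schema:
            problems.append(f"unexpected column: {column}")
    return problems


def write_batch(table, batch, schema=EXPECTED_SCHEMA):
    """Append the batch only if every record conforms (all or nothing)."""
    for record in batch:
        problems = validate(record, schema)
        if problems:
            raise ValueError(f"rejected batch: {problems}")
    table.extend(batch)
```

Without a check like this, a single malformed record from an upstream change lands in the lake silently and is only discovered when a downstream query breaks.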

We can work around these challenges, but doing so calls for a complex architecture:

- To solve point 3 above, say, we can implement the Lambda architecture, with two pipelines running simultaneously: one serving low-latency streaming data, and one for batch data that archives it for AI, reporting, and historical queries.

- Now imagine the data in the upstream system changes: we need to add validations to both pipelines to make sure our assumptions about the data still hold.

- Similarly, to handle point 1 we need to keep an eye on errors and recompute the whole table after a failure. To make this more efficient, we can partition the data at the most granular level, so that after an error we only delete and recreate the partitions where it occurred.

- How could point 4 be handled? We need to write a new Spark job to modify the data, and prevent users from accessing the data lake while the update runs.

- Implementing such a complex architecture means we spend more time on system problems than on getting insights from the data.

- If we ignore these challenges, our data lake turns into a data swamp, and as we all know, “a model is only as good as its data” (garbage in, garbage out).
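The partition-level recovery mentioned in the list above can be sketched with nothing but the standard library. This is a toy model under stated assumptions: the `date=...` directory layout, the CSV part files, and the `write_partition` and `read_table` helpers are hypothetical stand-ins for whatever layout and job a real pipeline uses.

```python
import shutil
import tempfile
from pathlib import Path


def write_partition(table_dir, date, rows):
    """(Re)create a single date partition from scratch."""
    partition = Path(table_dir) / f"date={date}"
    if partition.exists():
        shutil.rmtree(partition)  # drop the possibly corrupt partition
    partition.mkdir(parents=True)
    (partition / "part-0000.csv").write_text("\n".join(rows) + "\n")


def read_table(table_dir):
    """Read every row from every partition, in partition order."""
    rows = []
    for part_file in sorted(Path(table_dir).glob("date=*/*.csv")):
        rows.extend(part_file.read_text().splitlines())
    return rows


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as table_dir:
        write_partition(table_dir, "2019-01-01", ["a", "b"])
        write_partition(table_dir, "2019-01-02", ["c"])
        # A failed job left 2019-01-02 in a bad state: redo only that partition.
        write_partition(table_dir, "2019-01-02", ["c", "d"])
        print(read_table(table_dir))
```

Note that the delete-and-recreate step is itself not atomic: a reader that arrives between `rmtree` and the rewrite sees a missing partition, which is exactly the kind of problem the workaround pushes onto the pipeline author.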

Is there a simpler solution to the above problems?

Delta Lake:

- Yes. The creators of Apache Spark have provided us with Delta Lake, an open-source and open-standard framework.

- Databricks Delta, a component of the Databricks Unified Analytics Platform, is a unified data management system that brings unprecedented reliability and performance (10–100 times faster than Apache Spark on Parquet) to cloud data lakes.

- Designed for both batch and stream processing, it also addresses concerns regarding system complexity.

Its advanced architecture enables high reliability and low latency through techniques such as schema validation, compaction, and data skipping, which address pipeline development, data management, and query serving.

Delta Lake offers a much simpler analytics architecture that addresses both batch and streaming use cases, with:

- High query performance, using data indexing, data skipping, compaction, and data caching (I will cover these terms separately in future write-ups).

- High data reliability.

- ACID transactions in a distributed system without giving up performance, so the data being analyzed is fully ACID.

- Everything scalable and transactional.

With Delta Lake, two people reading at the same time see consistent snapshots of the data, because it guarantees an ordered sequence of snapshots: once data is written, it is definitely written.
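One way to picture how a "once written, definitely written" guarantee can be built on plain files is to publish each table version as a file that can only be created once. The sketch below is a toy model, not Delta's actual commit protocol; the `try_commit` helper, the zero-padded file naming, and the JSON payload are assumptions made for illustration.

```python
import json
import os
import tempfile


def try_commit(log_dir, version, actions):
    """Try to publish `actions` as table version `version`.

    Mode "x" makes open() fail if the file already exists, so only one
    writer can claim a given version number. A production system would
    also have to ensure readers never observe a half-written file.
    """
    path = os.path.join(log_dir, f"{version:020d}.json")
    try:
        with open(path, "x") as f:
            json.dump(actions, f)
        return True
    except FileExistsError:
        return False


if __name__ == "__main__":
    with tempfile.TemporaryDirectory() as log_dir:
        print(try_commit(log_dir, 0, [{"action": "add_file", "path": "a"}]))
        # A second writer racing for the same version loses and must retry
        # against the next version number.
        print(try_commit(log_dir, 0, [{"action": "add_file", "path": "b"}]))
        print(try_commit(log_dir, 1, [{"action": "add_file", "path": "b"}]))
```

Because a version either exists in full or does not exist at all, readers that list the committed versions always agree on the same ordered history.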

Put simply, a Delta Lake table is just a directory on AWS S3, a blob store, or a file system, holding a bunch of Parquet files along with a transaction log (the different table versions).

We still store the data in partition directories, which makes later debugging easier.

Delta Lake on File System

The transaction log holds the table versions. Each version is the result of a set of actions (change metadata, add a file, remove a file) applied to the table, and the table's current state is the result of all the actions applied so far.

Using the transaction log, we can time travel to any of a table's historical states.
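The idea that a table's state is the result of replaying actions, and that time travel is simply replaying fewer of them, can be sketched in a few lines. This is a toy in-memory model: the action names, the dictionary layout, and the `replay` function are illustrative assumptions and do not reproduce Delta's on-disk log format.

```python
def replay(log, version=None):
    """Rebuild table state by applying log entries up to `version` (inclusive).

    `log` is a list where index N holds the actions committed as version N.
    With version=None, every committed version is applied.
    """
    metadata, files = {}, set()
    last = len(log) - 1 if version is None else version
    for actions in log[: last + 1]:
        for action in actions:
            kind = action["action"]
            if kind == "change_metadata":
                metadata = action["metadata"]
            elif kind == "add_file":
                files.add(action["path"])
            elif kind == "remove_file":
                files.discard(action["path"])
            else:
                raise ValueError(f"unknown action: {kind}")
    return metadata, sorted(files)


# Hypothetical history of a small table.
log = [
    # version 0: create the table and write the first file
    [{"action": "change_metadata", "metadata": {"schema": ["id", "value"]}},
     {"action": "add_file", "path": "part-0.parquet"}],
    # version 1: append
    [{"action": "add_file", "path": "part-1.parquet"}],
    # version 2: rewrite part-0 as part-2 (for example, after an update)
    [{"action": "remove_file", "path": "part-0.parquet"},
     {"action": "add_file", "path": "part-2.parquet"}],
]

current_metadata, current_files = replay(log)       # latest state
_, files_at_v1 = replay(log, version=1)             # time travel to version 1
```

Here `current_files` is `['part-1.parquet', 'part-2.parquet']`, while `files_at_v1` is `['part-0.parquet', 'part-1.parquet']`: the removed file is still reachable through the older version because the log only records that it left the current state.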

Getting started with Databricks Delta:

- Add the Spark package: `pyspark --packages io.delta:delta-core_2.12:0.1.0`.

- Porting your existing Spark code to Delta is as simple as changing `CREATE TABLE … USING parquet` to `CREATE TABLE … USING delta`, or changing `dataframe.write.format("parquet").save("/data/events")` to `dataframe.write.format("delta").save("/data/events")`.

Want to learn more? Give it a try: https://delta.io/