Data Clean Rooms

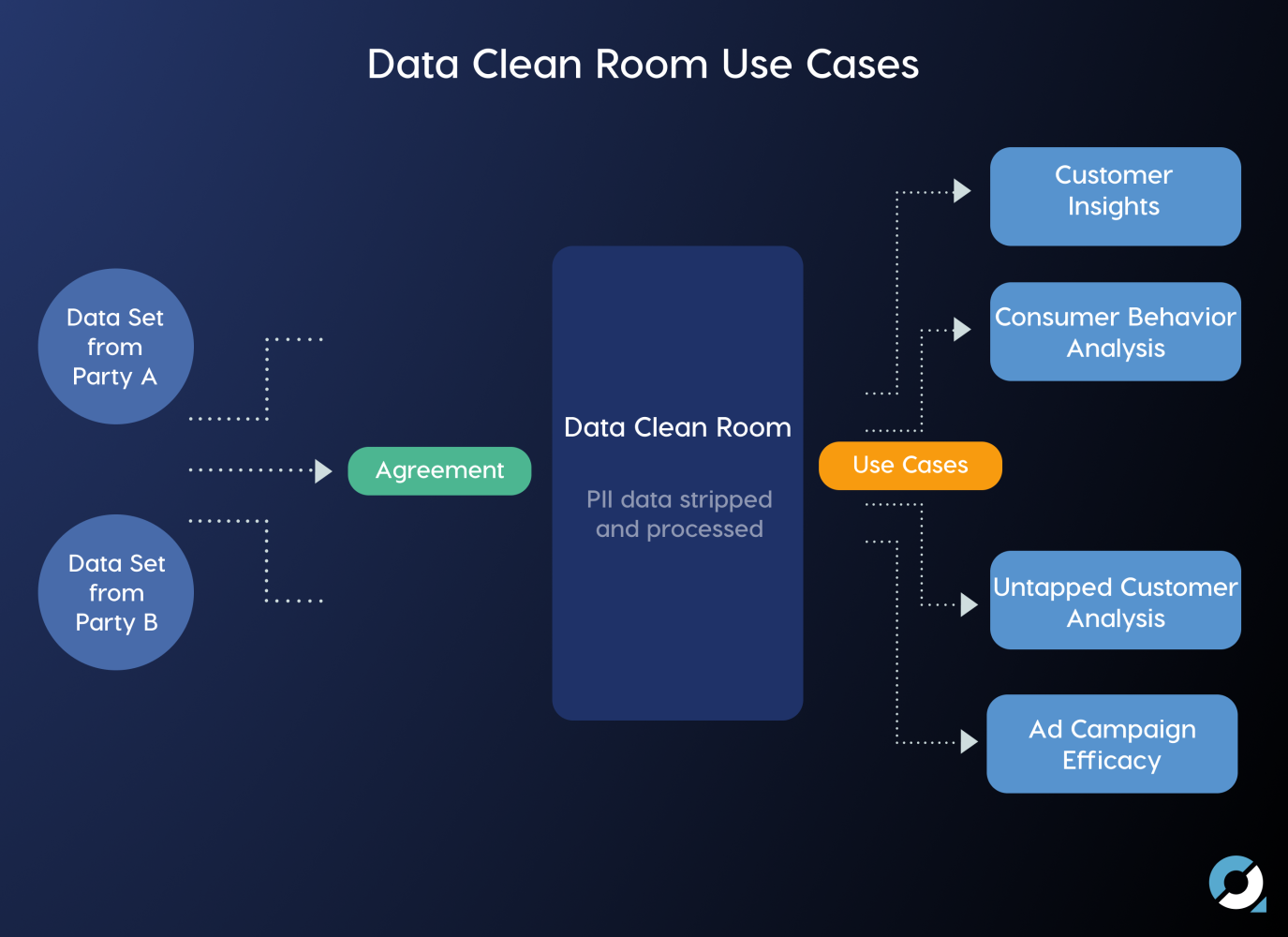

Data Clean Rooms (DCRs) are secure environments that enable multiple organizations (or divisions of an organization) to bring data together for joint analysis under defined guidelines and restrictions that keep the data secure.

- These guidelines control what data comes into the clean room.

- They also govern how data within the clean room can be joined with other data in the clean room.

- Finally, they determine the kinds of analytics that can be performed on the clean room data, and what data, if any, can leave the clean room environment.
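The three kinds of guidelines can be made concrete with a toy clean room. In this minimal Python sketch, everything (`CleanRoomPolicy`, `admit`, `join`, `count_by`, the sample advertiser and publisher rows, and the minimum group size of 3) is hypothetical. It only illustrates the idea and is not how Databricks or any other vendor implements a clean room.

```python
from collections import defaultdict
from dataclasses import dataclass


# Hypothetical policy object; not a Databricks API.
@dataclass
class CleanRoomPolicy:
    allowed_columns: dict[str, set[str]]  # participant -> columns admitted into the room
    join_key: str                         # the only column rows may be joined on
    min_group_size: int = 3               # smaller groups never leave the room


def admit(policy, participant, rows):
    """Guideline 1: only allow-listed columns enter the clean room."""
    allowed = policy.allowed_columns[participant]
    return [{k: v for k, v in row.items() if k in allowed} for row in rows]


def join(policy, left, right):
    """Guideline 2: rows are inner-joined only on the agreed key."""
    index = defaultdict(list)
    for right_row in right:
        index[right_row[policy.join_key]].append(right_row)
    return [
        {**left_row, **right_row}
        for left_row in left
        for right_row in index.get(left_row[policy.join_key], [])
    ]


def count_by(policy, rows, column):
    """Guideline 3: only counts leave the room, and small groups are suppressed."""
    counts = defaultdict(int)
    for row in rows:
        counts[row[column]] += 1
    return {key: n for key, n in counts.items() if n >= policy.min_group_size}


# Usage with made-up sample data.
policy = CleanRoomPolicy(
    allowed_columns={
        "advertiser": {"user_id", "campaign"},
        "publisher": {"user_id", "region"},
    },
    join_key="user_id",
)
ads = admit(policy, "advertiser", [
    {"user_id": i, "campaign": "A" if i < 5 else "B", "email": f"user{i}@example.com"}
    for i in range(1, 6)
])
views = admit(policy, "publisher", [
    {"user_id": i, "region": "EU" if i <= 3 else "US"} for i in range(1, 7)
])
joined = join(policy, ads, views)
print(count_by(policy, joined, "region"))    # {'EU': 3}  (US has only 2 rows)
print(count_by(policy, joined, "campaign"))  # {'A': 4}   (B has only 1 row)
```

Here the `email` column never enters the room, and groups smaller than the threshold are dropped from any result that leaves it. Suppressing small groups is one common output control; real clean rooms may combine it with others.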

- Traditional Data Clean Room (DCR) implementations require organizations to copy their data to a separate physical location.

- Databricks Lakehouse Cleanroom, announced in June 2022, allows businesses to easily collaborate with their customers and partners on any cloud in a privacy-safe way.

- Participants in the data cleanrooms can share and join their existing data, and run complex workloads in any language (Python, R, SQL, Java, and Scala) on the data while maintaining data privacy.

- Historically, organizations have leveraged data sharing solutions to share data with their partners and relied on mutual trust to preserve data privacy.

- But once data is shared, organizations have little or no visibility into how partners consume it across various platforms. This exposes them to potential data misuse and data privacy breaches.

Rapidly changing security, compliance, and privacy landscape:

- Data privacy regulations and changes in third-party measurement have transformed how organizations collect, use, and share data, particularly for advertising and marketing use cases.

- As these privacy laws and practices evolve, organizations will look for new solutions to join data with their partners in a privacy-centric way and achieve their business objectives in a cookie-less reality.

Collaboration in a fragmented data ecosystem:

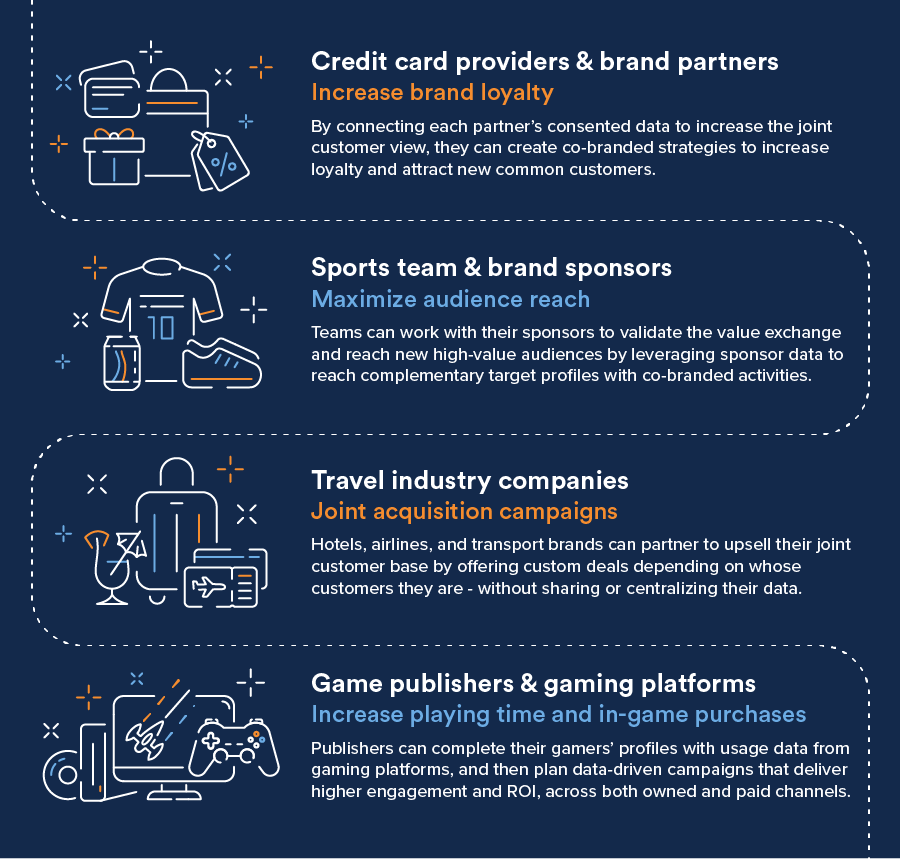

- Consumers’ digital footprints are fragmented across different platforms, so companies need to collaborate with their partners to create a unified view of their customers’ needs and requirements.

- To facilitate collaboration across organizations, cleanrooms provide a secure, private way to combine their data with other data to unlock new insights or capabilities.

New monetization opportunities with Data Clean Rooms:

- Companies will look for every possible advantage in monetizing their data without the risk of breaking privacy rules.

- This creates an opportunity for data vendors or publishers to join data for big data analytics without having direct access to the data.

Problems with the existing Data Cleanroom solutions

Data movement and replication:

- Data clean room vendors require participants to move their data into the vendor's platform, which results in platform lock-in and adds data storage costs for the participants.

- Participants spend a lot of time preparing the data in a standardized format before performing any analysis on the aggregated data.

- Data has to be replicated across multiple clouds and regions to facilitate collaboration with participants on different clouds, which comes with operational cost.

Restricted to SQL:

- SQL is powerful and absolutely needed for cleanrooms, but there are times when you require complex computations, such as machine learning, integration with APIs, or other analysis workloads, where SQL just won’t cut it.

Hard to scale:

- With collaboration limited to just two participants, organizations get partial insights on one cleanroom platform and end up moving their data to another cleanroom vendor, incurring the operational overhead of manually collating partial insights.

Data Cleanroom on the Databricks Lakehouse Platform

- The Databricks Lakehouse Platform provides a comprehensive set of tools to build, serve, and deploy a scalable and flexible data cleanroom based on your data privacy and governance requirements.

Secure data sharing with no replication:

- With Delta Sharing, cleanroom participants can securely share data from their data lakes with other participants without any data replication across clouds or regions.

- Your data stays with you and is not locked into any platform.

Full support to run arbitrary workloads and languages:

- Flexibility to run complex computations, such as machine learning or data workloads, in any language (SQL, R, Scala, Java, Python) on the data.

Easily scalable with guided on-boarding experience:

- Lakehouse Cleanroom is easily scalable to multiple participants on any cloud or region.

- Getting started is easy: predefined templates (e.g., jobs, workflows, dashboards) guide participants through common use cases, reducing time to insights.

Privacy-safe with fine-grained access controls:

- With Unity Catalog, you can enable fine-grained access controls on the data and meet your privacy requirements.

- Integrated governance allows participants to have full control over queries or jobs that can be executed on their data.

- All queries or jobs on the data are executed on Databricks-hosted trusted compute.
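Participants' control over which queries or jobs run on their data can be pictured as an approval gate in front of the shared compute. In this minimal Python sketch, `fingerprint`, `Participant`, and `TrustedRunner` are hypothetical names, and approving queries by a SHA-256 hash of their whitespace-normalized text is an assumption made for illustration. It does not reflect Unity Catalog's actual API or how Databricks enforces these controls.

```python
import hashlib
from dataclasses import dataclass, field


def fingerprint(query: str) -> str:
    """Hash whitespace-normalized query text so an approval covers exactly one query."""
    normalized = " ".join(query.split())
    return hashlib.sha256(normalized.encode("utf-8")).hexdigest()


@dataclass
class Participant:
    """Hypothetical participant: owns some tables and approves specific queries."""
    name: str
    tables: set[str]
    approved: set[str] = field(default_factory=set)

    def approve(self, query: str) -> None:
        self.approved.add(fingerprint(query))


class TrustedRunner:
    """Hypothetical stand-in for hosted compute: a job may run only if every
    participant owning a table it reads has approved that exact query."""

    def __init__(self, participants: list[Participant]):
        self.participants = participants

    def can_run(self, query: str, tables_read: set[str]) -> bool:
        known = set().union(*(p.tables for p in self.participants))
        if not tables_read or not tables_read <= known:
            return False  # refuse jobs that read unknown tables
        fp = fingerprint(query)
        owners = [p for p in self.participants if p.tables & tables_read]
        return all(fp in p.approved for p in owners)


# Usage with made-up table names.
advertiser = Participant("advertiser", {"adv.conversions"})
publisher = Participant("publisher", {"pub.impressions"})
runner = TrustedRunner([advertiser, publisher])

query = "SELECT region, COUNT(*) FROM joined GROUP BY region"
both_tables = {"adv.conversions", "pub.impressions"}

advertiser.approve(query)
before = runner.can_run(query, both_tables)  # False: publisher has not approved yet
publisher.approve("SELECT  region, COUNT(*)\nFROM joined GROUP BY region")  # same query, other spacing
after = runner.can_run(query, both_tables)   # True: both owners approved
print(before, after)  # False True
```

Because approvals are keyed on a hash of the query text, any change to a query beyond whitespace requires fresh approval from every participant whose tables it reads.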